Overview

Icarus is an open-source mecanum-drive robot created for students and hobbyists to use as a base for gaining experience with ROS2, Lidar, SLAM, and autonomous navigation and control.

I led a team of three engineers to create this robot, and the following sections detail the portions that I took ownership of.

The full project can be found here.

Design & Fabrication

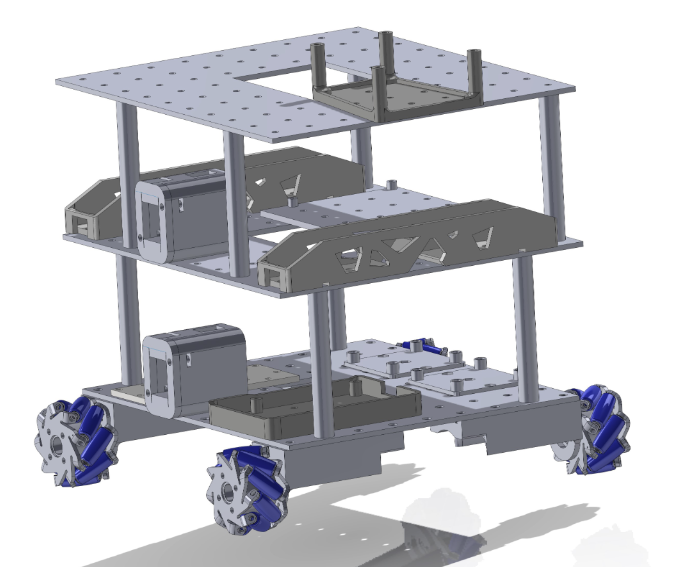

I took design inspiration from the popular TurtleBot research robot. I believed that its modular design, intended for quick swapping of sensors and components, allowed for a high degree of user customization. Similar to TurtleBot, the core identity of this robot was modularity, achieved through a variable number of "stacking" levels and multiple mounting locations that give full control over component position and configuration.

The assembly was entirely 3D printed, so all components were kept to simple shapes for ease of manufacturing, and all mating components could be assembled using a single tool head.

CAD Assembly

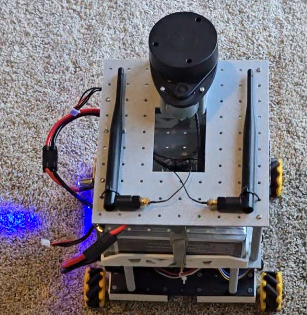

Assembly - No Lidar

Control & Software

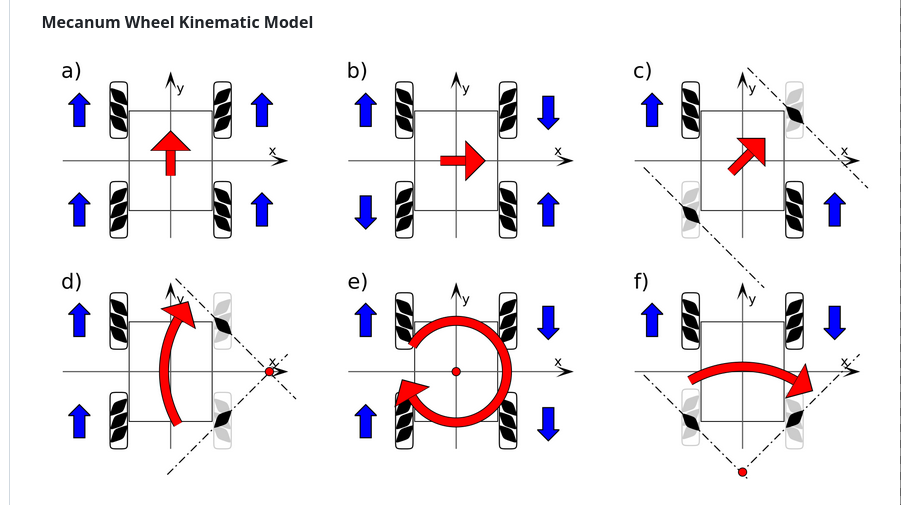

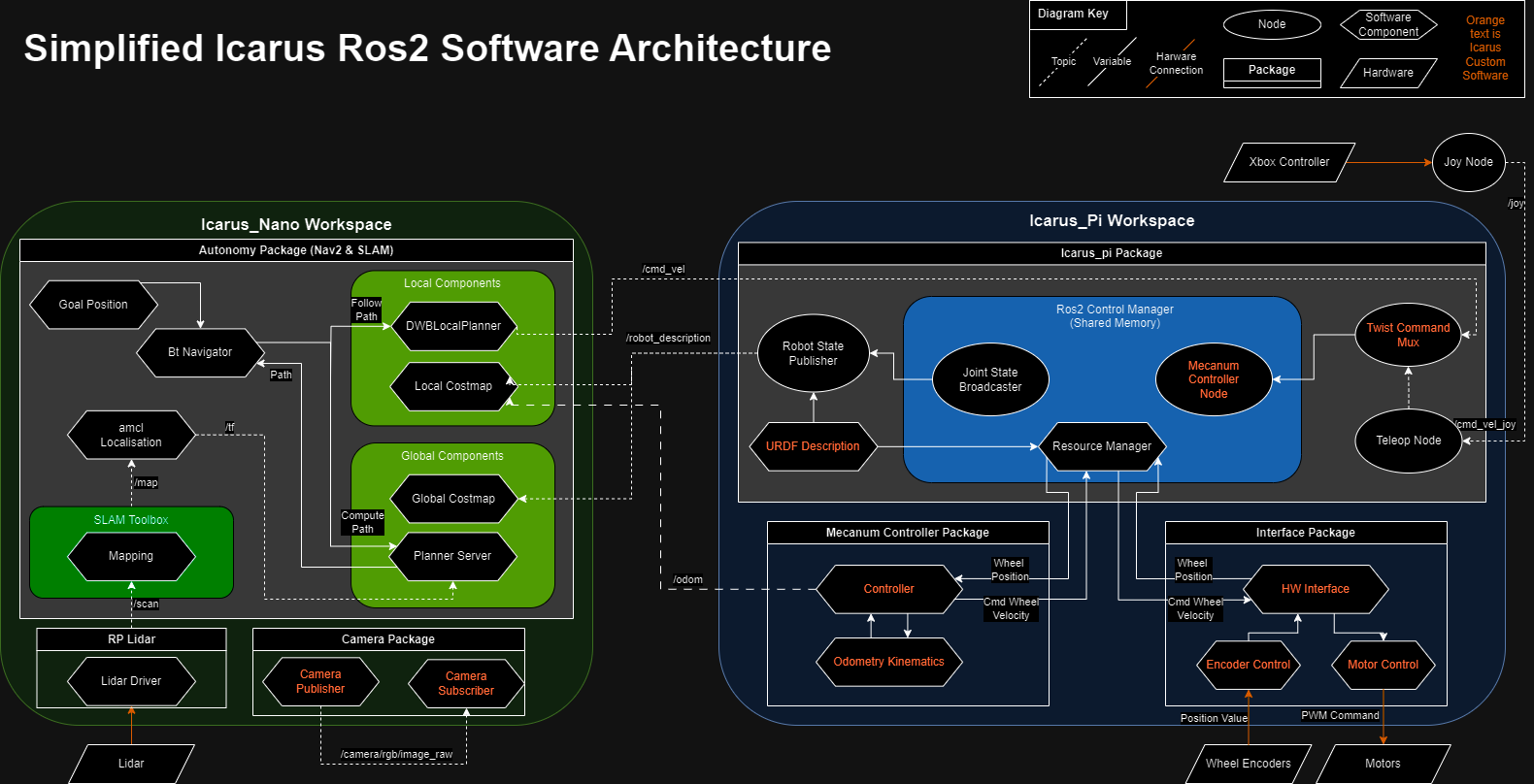

The ROS2 middleware was the core software used for this project. Using ROS2, I set out to design the forward and inverse kinematic control schemes for mecanum wheels using C++, the odometry setup that would later be used by our SLAM and navigation stacks, the simulation environment using Gazebo, and the PID control loop for the motors.
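As a rough illustration of what the forward and inverse kinematics for a mecanum base look like, here is a minimal C++ sketch. It is not the project's actual code. It assumes the common "X" roller layout, a body frame with x forward, y to the left, and counter-clockwise rotation positive, and wheels ordered front-left, front-right, rear-left, rear-right. The names `MecanumGeometry`, `BodyTwist`, `inverseKinematics`, and `forwardKinematics` are hypothetical, and the sign conventions may differ from the ones used on Icarus.

```cpp
#include <array>
#include <cassert>

// Hypothetical chassis parameters; real values depend on the printed frame.
struct MecanumGeometry {
  double wheel_radius;  // wheel radius in meters
  double half_length;   // half the front-to-rear wheel distance in meters
  double half_width;    // half the left-to-right wheel distance in meters
};

// Body-frame velocity: vx forward, vy left (m/s), wz counter-clockwise (rad/s).
struct BodyTwist {
  double vx;
  double vy;
  double wz;
};

// Wheel angular velocities in rad/s, ordered FL, FR, RL, RR.
using WheelSpeeds = std::array<double, 4>;

// Inverse kinematics: desired body twist -> required wheel speeds.
WheelSpeeds inverseKinematics(const MecanumGeometry& g, const BodyTwist& t) {
  assert(g.wheel_radius > 0.0);
  const double k = g.half_length + g.half_width;
  const double r = g.wheel_radius;
  return {(t.vx - t.vy - k * t.wz) / r,   // front-left
          (t.vx + t.vy + k * t.wz) / r,   // front-right
          (t.vx + t.vy - k * t.wz) / r,   // rear-left
          (t.vx - t.vy + k * t.wz) / r};  // rear-right
}

// Forward kinematics: measured wheel speeds -> resulting body twist.
BodyTwist forwardKinematics(const MecanumGeometry& g, const WheelSpeeds& w) {
  const double k = g.half_length + g.half_width;
  assert(k > 0.0);
  const double r = g.wheel_radius;
  return {r / 4.0 * (w[0] + w[1] + w[2] + w[3]),
          r / 4.0 * (-w[0] + w[1] + w[2] - w[3]),
          r / (4.0 * k) * (-w[0] + w[1] - w[2] + w[3])};
}
```

Under these assumptions the two functions are inverses of each other: feeding the output of `inverseKinematics` into `forwardKinematics` returns the original twist, which makes a convenient sanity check before running anything on hardware.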

Mecanum Movement Visual

High Level Software Diagram of ROS2 package interactions

Testing

To ensure system integrity, each subsystem was tested multiple times on its own before being gradually integrated into the main assembly. I personally took time to ensure that the forward kinematics controller and the odometry stack behaved correctly before integrating them into the main assembly.
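To give a sense of the kind of odometry behavior that can be checked in isolation, the sketch below integrates a body-frame velocity into a planar pose using a simple Euler step. This is an assumed, simplified model rather than the odometry stack used on the robot. `Pose2D` and `integrateOdometry` are hypothetical names, and the body frame is assumed to have x forward and y to the left.

```cpp
#include <cassert>
#include <cmath>

// Planar pose in the world (odom) frame: position in meters, heading in radians.
struct Pose2D {
  double x;
  double y;
  double theta;
};

// Advance the pose by one time step, given body-frame velocities
// (vx forward, vy left, wz counter-clockwise) and a step length dt in seconds.
Pose2D integrateOdometry(Pose2D pose, double vx, double vy, double wz, double dt) {
  assert(dt >= 0.0);
  const double c = std::cos(pose.theta);
  const double s = std::sin(pose.theta);
  // Rotate the body-frame velocity into the world frame, then integrate.
  pose.x += (vx * c - vy * s) * dt;
  pose.y += (vx * s + vy * c) * dt;
  pose.theta += wz * dt;
  return pose;
}
```

A test in this style might command a pure sideways strafe and confirm that only `y` changes, or command equal and opposite motions and confirm the pose returns to the origin.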

Motor response after PID implementation and tuning
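For readers unfamiliar with the idea, a per-motor PID velocity loop can be sketched as follows. This is a generic textbook form with output clamping, not the tuned controller from the project. The class name `PidController`, its parameters, and the output limits are assumptions for illustration, and anti-windup is left out to keep the example short.

```cpp
#include <algorithm>
#include <cassert>

// Minimal PID controller with a clamped output (hypothetical, for illustration).
class PidController {
 public:
  PidController(double kp, double ki, double kd, double out_min, double out_max)
      : kp_(kp), ki_(ki), kd_(kd), out_min_(out_min), out_max_(out_max) {
    assert(out_min <= out_max);
  }

  // Compute one control step. setpoint and measured share the same unit
  // (for example wheel speed in rad/s); dt is the step length in seconds.
  double update(double setpoint, double measured, double dt) {
    assert(dt > 0.0);
    const double error = setpoint - measured;
    integral_ += error * dt;
    // Skip the derivative term on the first call, when no previous error exists.
    const double derivative = has_prev_ ? (error - prev_error_) / dt : 0.0;
    prev_error_ = error;
    has_prev_ = true;
    const double output = kp_ * error + ki_ * integral_ + kd_ * derivative;
    // Limit the command to the actuator range (for example a PWM duty cycle).
    return std::max(out_min_, std::min(out_max_, output));
  }

 private:
  double kp_, ki_, kd_;
  double out_min_, out_max_;
  double integral_ = 0.0;
  double prev_error_ = 0.0;
  bool has_prev_ = false;
};
```

In a setup like this, one controller instance would typically run per wheel, with the setpoint coming from the inverse kinematics and the measurement from the wheel encoder.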

Results

Once all subsystems were validated, the end result was a robot that could switch between remote-controlled and autonomous modes and was capable of exploring and mapping new areas without user input.

Completed System